On a recent typical day, 2.6 million people in 232 countries encountered newly discovered malware, a Microsoft investigation found. These attacks comprised 1.7 million distinct, first-seen pieces of malware, and 60% of the campaigns were over within the hour. Malware now operates on a scale that human cybersecurity efforts simply cannot handle, raising the question: can we use artificial intelligence (AI) to stop hackers?

Cybersecurity experts posed this question at the Black Hat conference in Las Vegas last week. At first it may seem straightforward, but it becomes more complicated on closer inspection. AI is often regarded as capable of anything and everything, but hackers are experts at exploiting such technologies, so relying on AI too much could play into the hands of the very people you are trying to stop, critics say.

“What’s happening is a little concerning, and in some cases even dangerous,” warns Raffael Marty of human-centric cybersecurity firm Forcepoint, who believes that many cybersecurity companies are developing AI solutions only because of the hype around the technology. He fears that this trend will create a false sense of security and hand hackers greater success, with their attacks often going undetected.

He highlights products that rely on “supervised learning,” where companies must choose and label the data sets that their algorithms are trained on, for example by tagging malware code and “clean” code. Supervised learning lets firms release products quickly, but those products are inevitably trained on information that hasn’t been thoroughly scrubbed of anomalies, leaving holes in the AI’s ability to detect threats. Furthermore, attackers who gained access to the system could easily switch labels, ensuring that malware is seen as clean without anyone realizing.
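
To make the label-switching risk concrete, here is a minimal sketch in Python. It is a toy, not any vendor's product: the token names, the tiny training set, and the count-based classifier are all assumptions invented for illustration. It only shows how the same training code produces opposite verdicts once the labels have been flipped.

```python
from collections import Counter

LABELS = ("malware", "clean")

# Hypothetical training set: (behaviour tokens, human-assigned label).
TRAINING = [
    (["open_socket", "encrypt_files", "delete_backups"], "malware"),
    (["encrypt_files", "disable_antivirus"], "malware"),
    (["read_config", "draw_window"], "clean"),
    (["draw_window", "save_document"], "clean"),
]

def train(samples):
    """Count how often each token appears under each label."""
    counts = {label: Counter() for label in LABELS}
    for tokens, label in samples:
        counts[label].update(tokens)
    return counts

def classify(model, tokens):
    """Pick the label whose training data contained these tokens most often."""
    scores = {label: sum(model[label][t] for t in tokens) for label in LABELS}
    return max(scores, key=scores.get)

def flip_labels(samples):
    """Simulate an attacker relabeling every training sample as clean."""
    return [(tokens, "clean") for tokens, _ in samples]

SAMPLE = ["encrypt_files", "delete_backups"]

honest_model = train(TRAINING)
poisoned_model = train(flip_labels(TRAINING))

print(classify(honest_model, SAMPLE))    # malware
print(classify(poisoned_model, SAMPLE))  # clean
```

Nothing in the training or classification code changes between the two runs; only the labels do, which is why this kind of tampering can go unnoticed.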

“The bad guys don’t even need to tamper with the data,” says Martin Giles, the San Francisco bureau chief of MIT Technology Review, who also attended the cybersecurity event. “Instead, they could work out the features of code that a model is using to flag malware and then remove these from their own malicious code so the algorithm doesn’t catch it.”
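
The evasion Giles describes can be sketched in a few lines of Python. Everything here is hypothetical: the feature names, the integer weights, and the threshold are invented, and the sketch assumes the attacker has already worked out which features the detector weighs most heavily. A real attacker would have to infer that by probing, and would also have to keep the malware functional after stripping features.

```python
# Hypothetical detector: each feature adds to a score; flag at the threshold.
WEIGHTS = {
    "packed_binary": 5,
    "writes_to_startup": 4,
    "calls_crypto_api": 3,
    "has_gui": -2,
}
THRESHOLD = 6

def score(features):
    """Sum the weights of the features present (unknown features count as 0)."""
    return sum(WEIGHTS.get(f, 0) for f in features)

def is_flagged(features):
    return score(features) >= THRESHOLD

def evade(features):
    """Greedily drop the most incriminating feature until the detector is silent."""
    remaining = set(features)
    while is_flagged(remaining):
        worst = max(remaining, key=lambda f: WEIGHTS.get(f, 0))
        remaining.remove(worst)
    return remaining

malicious = {"packed_binary", "writes_to_startup", "calls_crypto_api", "payload_logic"}
disguised = evade(malicious)

print(is_flagged(malicious))  # True
print(is_flagged(disguised))  # False
print(sorted(disguised))      # ['calls_crypto_api', 'payload_logic']
```

The malicious payload survives untouched; only the features the model was keying on have been removed.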

However, “using machine learning and AI to help automate threat detection and response can ease the burden on employees, and potentially help identify threats more efficiently than other software-driven approaches,” Giles added. This is why AI persists in cybersecurity and, if its flaws can be reduced, could become the centerpiece of the fight against hackers and other cyber threats.

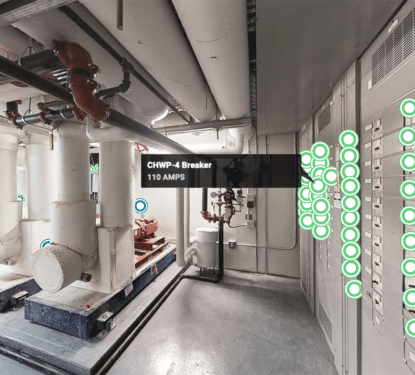

The risk of overreliance arises when a single master algorithm is used to drive the security system, suggest Holly Stewart and Jugal Parikh of Microsoft. The danger, they say, is that if that single algorithm is compromised, there would be no backup to flag the issue, meaning the problem would go unnoticed. Their team at Microsoft, however, has developed a solution that overcomes this issue while still benefiting from the automation offered by AI.

“Humans are susceptible to social engineering. Machines are susceptible to tampering. Machine learning is vulnerable to adversarial attacks,” said Stewart, Parikh, and their colleague Randy Treit during a presentation at Black Hat. The team confirmed that security researchers have even managed to attack deep learning models used to classify malware and completely change their predictions, with access to nothing more than the output labels the model returns for samples fed in by the attacker.

“In our layered machine learning approach, defeating one layer does not mean evading detection, as there are still opportunities to detect the attack at the next layer, albeit with an increase in time to detect,” the Microsoft speakers said. “To prevent detection of first-seen malware, an attacker would need to find a way to defeat each of the first three layers in our ML-based protection stack.”
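
The layered idea in that quote can be illustrated with a short Python sketch. This is not Microsoft's protection stack: the three layer names, the latency figures, and the boolean sample fields are assumptions made up for the example. It only demonstrates the property the speakers describe, namely that a sample slipping past one layer can still be caught by a later one, at the cost of a longer time to detect.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Layer:
    name: str
    latency_ms: int                  # hypothetical cost of consulting this layer
    detects: Callable[[dict], bool]  # returns True if the layer flags the sample

def run_stack(layers: List[Layer], sample: dict) -> Tuple[Optional[str], int]:
    """Try each layer in order; return (detecting layer or None, elapsed time)."""
    elapsed_ms = 0
    for layer in layers:
        elapsed_ms += layer.latency_ms
        if layer.detects(sample):
            return layer.name, elapsed_ms
    return None, elapsed_ms

# Hypothetical three-layer stack, cheapest and fastest layer first.
STACK = [
    Layer("local_heuristics", 1, lambda s: s["trips_local_rules"]),
    Layer("metadata_model", 50, lambda s: s["suspicious_metadata"]),
    Layer("sample_analysis", 500, lambda s: s["malicious_behavior"]),
]

evades_first_layer = {
    "trips_local_rules": False,
    "suspicious_metadata": True,
    "malicious_behavior": True,
}
evades_all_layers = {
    "trips_local_rules": False,
    "suspicious_metadata": False,
    "malicious_behavior": False,
}

print(run_stack(STACK, evades_first_layer))  # ('metadata_model', 51)
print(run_stack(STACK, evades_all_layers))   # (None, 551)
```

Only the second sample, which defeats every layer, escapes detection; the first is caught one layer later and 50 ms slower than it would have been at the first layer.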

Marty and other critics, however, still fear that this is not enough. They point out that the more complex an algorithm gets, the harder it is to understand the decisions the AI makes. “No one really knows how the most advanced algorithms do what they do. That could be a problem,” says Will Knight in an article entitled ‘The Dark Secret at the Heart of AI.’ For many, when it comes to protecting our physical or cyber security, that mystery could be a little too scary for comfort.